Trust Issues Newsletter Archive

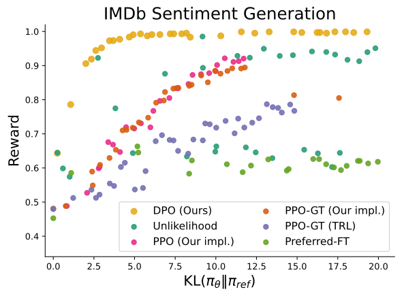

Direct preference optimization and a study on LLM factuality. Plus the White House’s executive order on trustworthy AI.

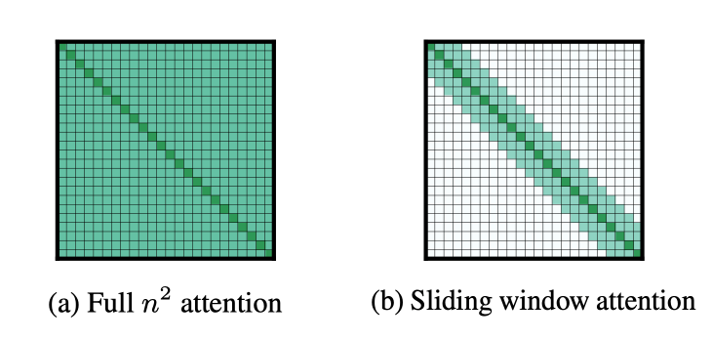

Chain of density prompting and sliding window attention. Plus Mistral’s new SOTA model and summarization evaluation in TruLens.

Influence functions for explaining LLMs, automated evaluations for reducing hallucinations in ChatGPT, OpenAI fine-tuning, and federal AI licensing.

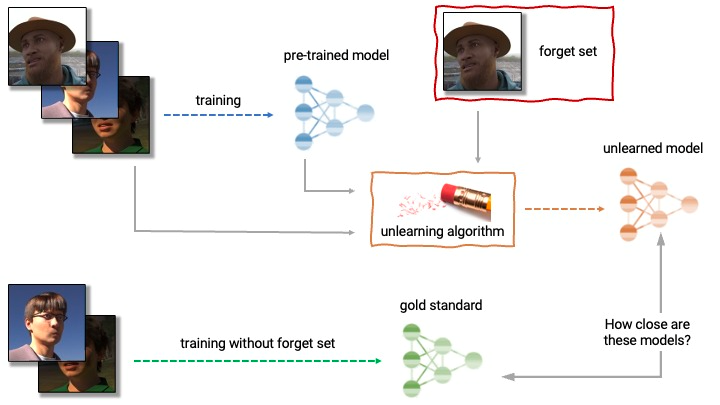

Researchers jailbreak ChatGPT, the right to be forgotten, automatic generation of LLM attacks, and machine unlearning.

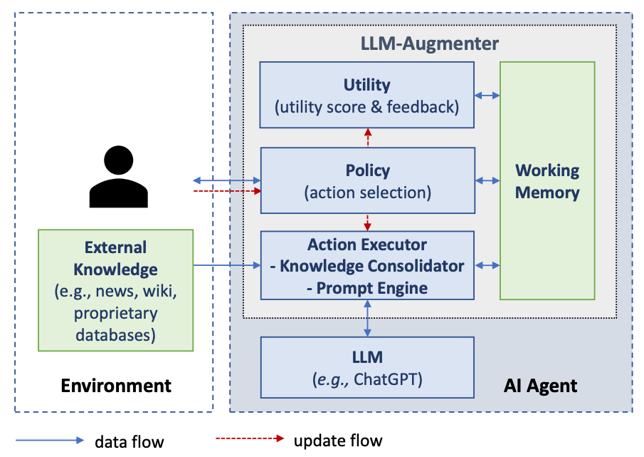

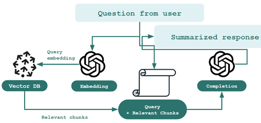

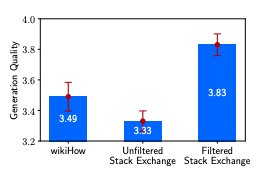

We do a deep dive into retrieval-augmented generation, along with a method for improving it called data importance evaluation. Also, the way people use LLMs is changing, with the rise of AI teaching assistants and the decline of ChatGPT usage.

We explore more efficient ways of training models and constructing augmented language model systems. We also ponder upward and downward pressure on AI.

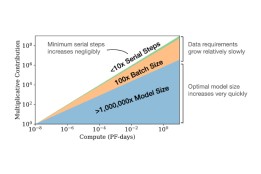

Scaling laws for LLMs, advancements in LLM adaptation, and Google/OpenAI have no moat unless regulations build them one.

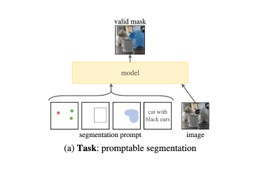

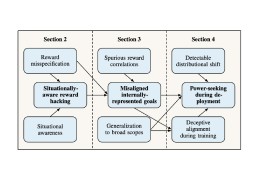

Emergent risks of generative AI, promptable image segmentation and Twitter’s open sourced recommender system - a red herring?

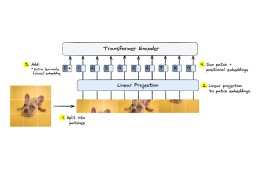

How vision transformers (ViTs) work, and a new method for explaining them with ViT Shapley. Plus multimodal language models, and the reproducibility crisis reaches Principal Component Analysis (PCA). The FTC steps in on unjustified AI claims, and OpenAI releases its gpt-3.5-turbo API.

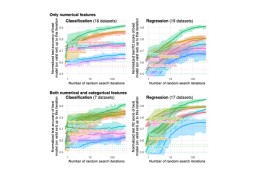

Tree-based models still outperform deep learning for tabular data, explainable AI is also a social challenge, and a failed attempt at classifying LLM-generated text.

We touch on the alignment problem in deep learning, SparseGPT reaches 50-60% sparsity with negligible accuracy loss, Microsoft announces OpenAI integration in Bing, and DoNotPay announces its AI legal assistant.

The generative AI issue. We take on the societal implications of generative AI systems like ChatGPT and DALL-E 2, an AI that can play Diplomacy, and concept-based explanations.

What makes an explainability method for NLP good, and how do you evaluate it? Plus, Stack Overflow goes offline and the hype starts to pick up for generative AI.

Robust models have smoother decision boundaries, and enforcing interpretability in deep learning models during training can improve their performance. Plus, NYC mandates audits for AI in hiring, and the White House releases the AI Bill of Rights.

About TruEra

TruEra provides AI Quality solutions that analyze machine learning, drive model quality improvements, and build trust. Powered by enterprise-class Artificial Intelligence (AI) Explainability technology based on six years of research at Carnegie Mellon University, TruEra's suite of solutions provides much-needed model transparency and analytics that drive model quality and overall acceptance, address unfair bias, and ensure governance and compliance.