Berlin Meetup | 17 May 2023

Can you trust your AI model’s predictions?

Expert panel followed by cocktails and dinner

Join us for an expert discussion on the tools and methods available to build and deploy trustworthy AI models

Artificial intelligence (AI) holds tremendous potential to create value for organizations and broader society. A McKinsey study estimates that AI applications could generate $3.5 to $5.8 trillion in value per year. Yet only a small percentage of organizations have managed to deploy AI at scale, and AI's trustworthiness, or lack thereof, has often been cited as a key barrier. It is now well established that, without proper oversight, AI may generate errors, exacerbate human bias, produce opaque decisions or create privacy risks. These challenges are magnified by the growing adoption of large language models (LLMs) and other foundation models, which are so large that they are extremely difficult to evaluate, debug and monitor at scale.

Ensuring that AI models are trustworthy is particularly critical for startups, because opaque, poorly performing or unfair models alienate clients, draw regulatory scrutiny and deter investors.

How do you address these challenges? This panel of AI experts will cover:

- The requirements for a trustworthy AI model

- How to test and debug AI models, particularly LLMs, to increase their trustworthiness

- How to monitor ML models at scale

The panel discussion will be followed by a Q&A, where all participants can pose questions and share their perspectives, and then by cocktails and dinner.

Details

- When: Wednesday, May 17 at 5:30pm

- Where: Max-Urich-Straße 3, 13355 Berlin, Germany

- What: Data science meetup with expert panel discussion, cocktails and dinner

Panelists

Lofred Madzou, Director of Strategy and Business Development at TruEra and research associate at the Oxford Internet Institute, where he focuses on AI auditing. Previously, he was an AI Lead at the World Economic Forum. He also co-drafted France's national AI strategy.

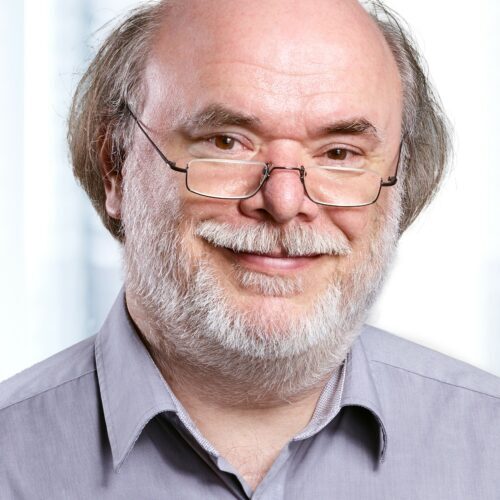

Philipp Slusallek, Scientific Director at the German Research Center for Artificial Intelligence (DFKI), where he has led the research area "Agents and Simulated Reality" since 2008. He has also been a full professor of Computer Graphics at Saarland University since 1999.

Sebastian Hallensleben, Chair of CEN-CENELEC JTC 21, where the European AI standards that will underpin EU regulation are being developed. He is also a member of the Expert Advisory Board of the EU StandICT programme and Chair of the Trusted Information working group, and he heads Digitalisation and Artificial Intelligence at VDE Association for Electrical, Electronic and Information Technologies.

Register for Meetup

About TruEra

TruEra provides AI Quality solutions that analyze machine learning models, drive model quality improvements and build trust. Powered by enterprise-class AI explainability technology based on six years of research at Carnegie Mellon University, TruEra's suite of solutions provides much-needed model transparency and analytics that drive high model quality and overall acceptance, address unfair bias, and ensure governance and compliance.